2024 Governance Outlook

The audit committee has many discrete duties, including overseeing financial reporting and related internal controls, the independent and internal auditors, and ethics and compliance, to name just a few. However, these and other duties are part of a broader audit committee responsibility: risk oversight. While the audit committee does not manage all risks, it is responsible for overseeing the procedures and processes by which the company anticipates, evaluates, monitors, and manages risks of all types.

Recent developments in artificial intelligence (AI), including the emergence of generative AI, are leading businesses to evaluate AI’s potential impact on their business technology strategy. As businesses expand their use of AI, especially into core business processes, the audit committee will need to understand the challenges and opportunities AI presents in order to address risks related to governance and stakeholder trust.

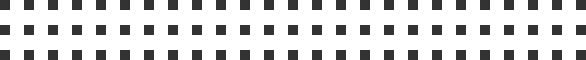

According to a 2023 survey conducted by Deloitte and the Society for Corporate Governance, corporate secretaries see AI strategy and oversight as still evolving. The findings show that few respondents (13%) had a formalized AI oversight framework, although many (36%) were considering the development and implementation of AI oversight policies and procedures.

These results are particularly interesting when compared to a 2022 Deloitte survey, in which 94 percent of respondents said AI was critical to their company’s short-term success.1 This may suggest some level of information asymmetry between management and the board, congruent with the notion that AI is in a state of flux. Thus, at least for now, the AI landscape might best be characterized as an abstract governance puzzle.2

FAMILIAR AND DIFFERENT SET OF RISKS

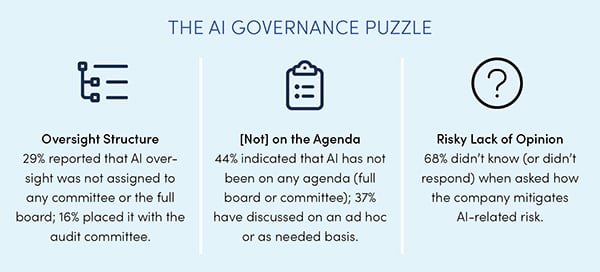

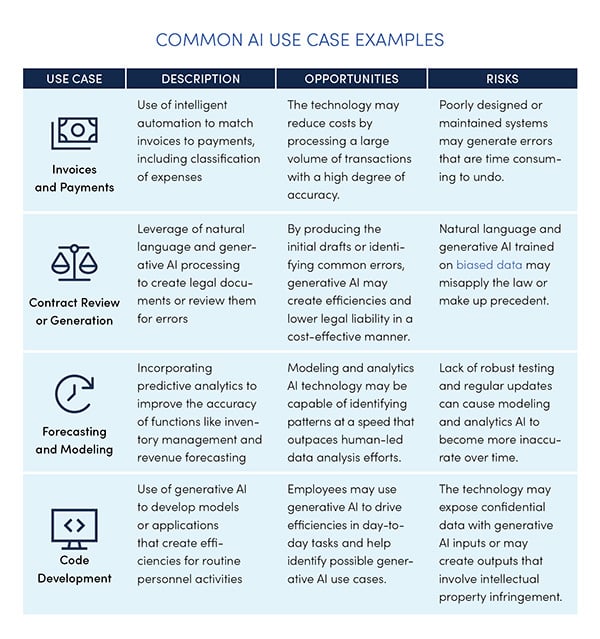

With new technology comes the possibility of new risks. Some AI risks present well-trodden challenges that arise in other technology areas and can be overseen and understood in the context of an ongoing enterprise risk management (ERM) process,3 such as the COSO ERM framework. However, other risks may be unfamiliar and/or amplified. A few illustrative examples are highlighted below.

Finding the appropriate balance between AI’s benefits and risks depends on a constellation of factors. Outputs produced by generative AI change over time as the technology learns from data. But, just as humans can, this subcategory of AI technology can learn things that are incorrect. For that reason, traditional risk management strategies may not be well equipped for the challenges that arise from generative AI use.

Whether a risk is familiar, completely new, or amplified, the consequences may be notable. Failure to mitigate any subcategory of AI-related risk may lead to adverse outcomes such as reputational damage, financial losses, legal action, and regulatory infractions. A starting point for addressing such concerns might be to use mitigation strategies that are already known to work in other contexts, such as the COSO ERM framework referred to earlier. For AI-centric guidance related to implementation and scaling, it may be worth considering frameworks such as the NIST AI Risk Management Framework.

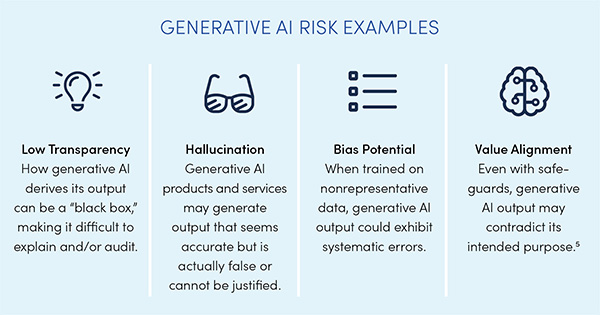

WITH RISKS COME BENEFITS, TOO

If AI presented nothing but risk, it seems unlikely that it would have emerged as “the” technology of the future. Clearly, AI has benefits, some of which may not be known for some time. One particular set of benefits is squarely in the audit committee’s wheelhouse—namely, the potential to streamline and enhance a company’s internal audit, financial reporting, and internal control functions. There are also aspects of generative AI technology that, while still evolving, may one day fundamentally change an organization’s financial systems. While there is much uncertainty, the future transformative potential of generative AI may add much to the current array of use cases. In the shorter term, various subcategories of AI are already capable of improving the quality of financial reporting via reviewing transactions, identifying errors, addressing internal control gaps, and detecting fraud. If AI isn’t being used within these areas, the audit committee might ask if the company is exploring potential use cases—and if not, why not.

The tendency to assign oversight of emerging risks to the audit committee means it is sometimes described as the “kitchen sink” of the board. However, as noted earlier, this is consistent with the audit committee’s overarching role in risk oversight. It is also common for topics that the audit committee takes on at the outset to eventually be overseen by other committees. Some aspects of AI oversight seem more aligned with the audit committee’s work than others. When weighing that fit, it may be helpful to consider the audit committee’s current level of technology fluency and comfort. For instance, given the audit committee’s traditional governance areas, it may be prudent for it to oversee AI use in financial reporting.6

In other parts of AI oversight, it may be less clear whether the audit committee is a “good fit.” For example, the impact of generative or natural language AI on the workforce may be more aligned with the oversight of the compensation/talent committee or the full board.

The “temporary assignment” of AI to the audit committee may make sense for other reasons, as well. First, AI remains an emerging technology and is likely to continue to change rapidly. Second, there is extensive governmental interest in AI, which may result in legislation that will require adjustments in its oversight. Thus, determining now that AI, or aspects of AI, should be overseen by another committee or committees may turn out to be premature.

An audit committee might choose to assess its AI risk tolerance across oversight areas such as auditing, financial reporting, and internal control functions. It may be helpful to contextualize that analysis by comparing it to other areas of the company. For example, company divisions that routinely use technology enhancements in client-facing operations may have a higher appetite for risk. But a higher risk tolerance in operational settings does not necessarily correlate with how risks are viewed when it comes to financial reporting impacts.

An important part of the AI governance puzzle for the audit committee is assessing risk. For now, that task is made more difficult by a shifting regulatory landscape. Governments and regulators around the world are considering whether regulation and policy can address AI risks, but progress is uneven: jurisdictions are at different stages of developing and enacting AI policies and regulations. To make things more complex, stakeholder groups (shareholders, customers/clients, employees, suppliers, and community) have varying and sometimes conflicting expectations around the use and governance of AI. For these reasons, there may be a benefit to continuously assessing AI risks and benefits rather than waiting for emerging and future legislative proposals or regulatory guidance. But to make such continual assessments accurately, it’s important that the audit committee and the board have sufficient knowledge to ask questions about the organization’s adoption and use of AI.

NOTES:

1 Business leaders were defined as company representatives who met one or more of the following qualifiers: (1) responsible for AI technology spending or approval of AI investments, (2) responsible for the development of AI strategy, (3) responsible for implementation of AI technology, (4) acting as AI technology subject-matter specialist, or (5) otherwise stated they were influencing decisions around AI technology. See Nitin Mittal, Irfan Saif, and Beena Ammanath, Fueling the AI transformation: Four key actions powering widespread value from AI, right now, State of AI in the Enterprise, 5th Edition report, Deloitte, October 2022.

2 Natalie Cooper, Bob Lamm, and Randi Val Morrison, “Future of tech: Artificial intelligence (AI),” Board Practices Quarterly, Deloitte, August 2023.

3 Alexander J. Wulf and Ognyan Seizov, “‘Please understand we cannot provide further information’: Evaluating content and transparency of GDPR-mandated AI disclosures,” AI & Society (2022).

4 Christian Heinze, “Patent infringement by development and use of artificial intelligence systems, specifically artificial neural networks,” in A Critical Mind: Hanns Ullrich’s Footprint in Internal Market Law, Antitrust and Intellectual Property, eds. Christine Godt and Matthias Lamping, MPI Studies on Intellectual Property and Competition Law, vol. 30 (Heidelberg, Germany: Springer, 2023), pp. 489–515.

5 Vic Katyal, Cory Liepold, and Satish Iyengar, “Artificial intelligence and ethics: An emerging area of board oversight responsibility,” On the Board’s Agenda, Deloitte, 2020.

6 The audit committee may want to also think about indirect impacts. Depending on the use case, AI technology may have an array of indirect effects on financial measures (GAAP or otherwise).

Brian Cassidy is an Audit & Assurance partner with Deloitte & Touche LLP and the US Audit & Assurance Trustworthy AI leader.

Ryan Hittner is an Audit & Assurance principal with Deloitte & Touche LLP and the US Artificial Intelligence & Algorithmic Assurance coleader.

Krista Parsons is an Audit & Assurance managing director with Deloitte & Touche LLP. She is also the Governance Services coleader and the Audit Committee Program leader for Deloitte’s Center for Board Effectiveness.